This is an update to http://github.com/faceit_live that uses the [first order model](https://github.com/AliaksandrSiarohin/first-order-model) by Aliaksandr Siarohin to generate the images. The model requires only a single image, so no training is needed and setup is much easier. I've included instructions on how to set it up under **Windows 10** and **Linux**.

Don't forget to use the *--recurse-submodules* parameter when cloning to check out all dependencies. On Windows you might need to install a [Git client](https://git-scm.com/download/win).
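
One way to confirm that the submodules were actually checked out is to compare `.gitmodules` against the working tree. This is a minimal sketch, assuming the usual `.gitmodules` layout with one `path = ...` line per submodule; the helper name `missing_submodules` is hypothetical and not part of this repo.

```python
import re
from pathlib import Path


def missing_submodules(repo_root="."):
    """Return submodule paths listed in .gitmodules that are absent or empty."""
    root = Path(repo_root)
    gitmodules = root / ".gitmodules"
    if not gitmodules.is_file():
        return []  # no submodules declared
    # Each submodule section carries a "path = <dir>" line.
    paths = re.findall(
        r"^\s*path\s*=\s*(.+?)\s*$", gitmodules.read_text(), re.MULTILINE
    )
    missing = []
    for rel in paths:
        target = root / rel
        # A submodule that was never initialised is a missing or empty directory.
        if not target.is_dir() or not any(target.iterdir()):
            missing.append(rel)
    return missing


if __name__ == "__main__":
    for rel in missing_submodules("."):
        print(f"submodule not checked out: {rel}")
```

If anything is reported, running `git submodule update --init --recursive` inside the checkout should fetch the missing dependencies.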

## Download 'vox-adv-cpk.pth.tar' to /model folder

You can find it at: [google-drive](https://drive.google.com/open?id=1PyQJmkdCsAkOYwUyaj_l-l0as-iLDgeH) or [yandex-disk](https://yadi.sk/d/lEw8uRm140L_eQ).
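
A small check like the following can catch a missing or misplaced checkpoint before the program starts. It is only a sketch: it assumes the `/model` folder sits at the root of the checkout, and `checkpoint_path` is a hypothetical helper, not a function from this project.

```python
from pathlib import Path

CHECKPOINT_NAME = "vox-adv-cpk.pth.tar"


def checkpoint_path(repo_root="."):
    """Return the expected checkpoint location, or raise if the file is not there."""
    # Assumption: the model folder lives directly under the repository root.
    path = Path(repo_root) / "model" / CHECKPOINT_NAME
    if not path.is_file():
        raise FileNotFoundError(
            f"{path} not found; download it into the model folder first"
        )
    return path


if __name__ == "__main__":
    print("checkpoint found at", checkpoint_path("."))
```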

# Install Nvidia Deep Learning Drivers / Libs

Install the latest Nvidia video driver then the Deep Learning infrastructure:

* [cuDNN](https://developer.nvidia.com/cudnn) version for CUDA 10.1 - you will need to register to download it.

Other versions might work, but I haven't tested them.

## Usage

Put the jpg/png images you want to play with in the `/media` directory. Square images in which a face fills most of the frame work best.
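
To see which files in the folder would qualify as jpg/png images, a sketch like this can help. It assumes the `media` folder is relative to the checkout and treats `.jpeg` as a jpg variant; how the program itself scans the folder may differ, and `list_media_images` is a hypothetical name.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def list_media_images(media_dir="media"):
    """Return the jpg/png files in media_dir, sorted by path."""
    folder = Path(media_dir)
    if not folder.is_dir():
        return []
    return sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


if __name__ == "__main__":
    for image in list_media_images("media"):
        print(image.name)
```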

# Setup Windows Version

## Create an Anaconda environment and install requirements

Download and install [OBS Studio for Win](https://obsproject.com/download), then install the [OBS Virtual CAM plugin](https://github.com/CatxFish/obs-virtual-cam/releases) by following the instructions on its page.

After you install Virtual CAM:

- Create a Scene

- Add a Window Capture item to Sources and select the "Stream Window"

- Add a Filter to the Window Capture by right clicking and selecting Filters, then "+" and choose Virtual CAM

- Start the Virtual CAM from the Tools Menu

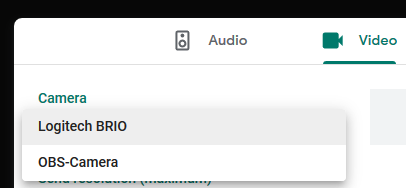

Open Firefox and join a Google Hangout to test it. Don't forget to choose the OBS CAM from the camera options under settings.

# Setup Linux Version

## Create an Anaconda environment and install requirements

To use the fake webcam feature to join conferences with our stream, we need to insert the **v4l2loopback** kernel module in order to create */dev/video1*. Follow the install instructions at https://github.com/umlaeute/v4l2loopback, then set up the fake webcam.
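
Once the module is loaded, a quick way to confirm that the loopback device exists is to look for it under `/dev`. This is a hedged sketch using only the standard library; `video_devices` and `is_char_device` are hypothetical helpers, and the device number on your machine may not be 1.

```python
import stat
from pathlib import Path


def video_devices(dev_dir="/dev"):
    """Return the sorted video* entries found under dev_dir."""
    return sorted(str(p) for p in Path(dev_dir).glob("video*"))


def is_char_device(path):
    """True if path exists and is a character device, as V4L2 nodes are."""
    try:
        return stat.S_ISCHR(Path(path).stat().st_mode)
    except OSError:
        return False


if __name__ == "__main__":
    print("video devices:", video_devices())
    print("/dev/video1 ready:", is_char_device("/dev/video1"))
```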

On Ubuntu 18, I had to make a minor change to the source code of v4l2loopback.c to get loopback working. If the steps above don't work, you can try this change before running *make*:

For better results, look into the webcam when starting the program or when pressing C, as this creates the base image of your face that is used for the transformation. Move closer to or farther from the webcam to find the ideal distance.

## Troubleshooting

### Slow

If it is running slowly, check that it is running on the GPU by looking at Task Manager on Windows or the NVIDIA Control Panel on Linux.
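
The same check can be scripted with `nvidia-smi`, which ships with the NVIDIA driver. The sketch below is an illustration rather than part of this project: it assumes `nvidia-smi` is on the PATH, and the helper names `parse_gpu_rows` and `query_gpus` are hypothetical.

```python
import shutil
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=index,name,utilization.gpu,memory.used",
    "--format=csv,noheader",
]


def parse_gpu_rows(csv_text):
    """Turn nvidia-smi CSV output into a list of dicts, one per GPU."""
    rows = []
    for line in csv_text.strip().splitlines():
        index, name, util, mem = [field.strip() for field in line.split(",")]
        rows.append(
            {"index": int(index), "name": name, "utilization": util, "memory_used": mem}
        )
    return rows


def query_gpus():
    """Return GPU rows, or None when nvidia-smi is not on the PATH."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_rows(result.stdout)


if __name__ == "__main__":
    print(query_gpus())
```

If utilization and memory use stay near zero while the program is running, it is probably falling back to the CPU.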

### Multiple GPU

If you have more than one GPU, you might need to set some environment variables:

```
# specify which display to use for rendering (Linux)
$ export DISPLAY=:1

# which CUDA DEVICE to use (run nvidia-smi to discover the ID)